There are two stages of AI. In the first, it is simple enough that humans can game it to distort outcomes. In the second, it is too advanced for humans to control. Neither stage will benefit humanity, but the second will have catastrophic, irreversible, and potentially existential impacts on it.

Moore’s Law

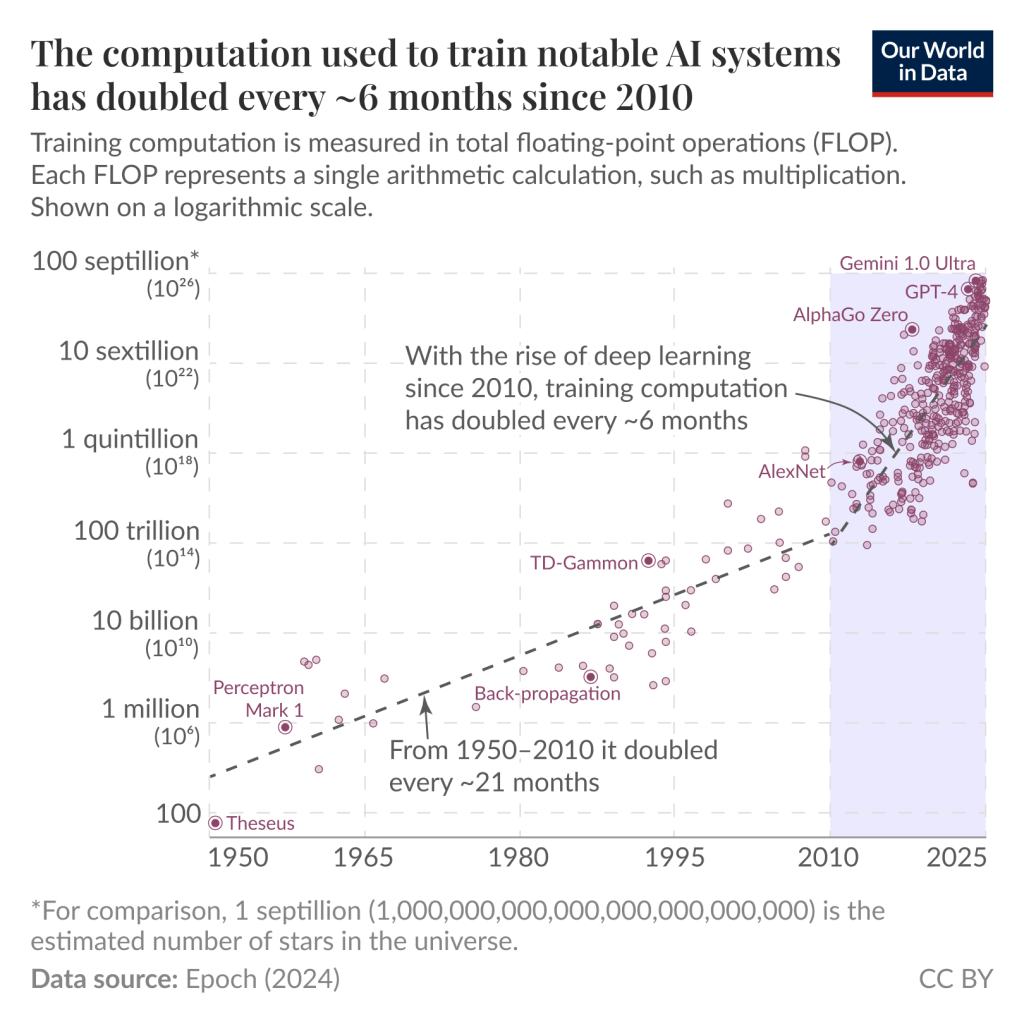

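Moore’s law is the observation that the number of transistors in an integrated circuit (IC) doubles about every two years. Gordon Moore first described the trend in 1965 (initially as a yearly doubling, which he revised to every two years in 1975), three years before he co-founded Intel, where he later served as CEO.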

In November 2024, Nvidia’s CEO Jensen Huang claimed that we are now in an era of ‘Hyper-Moore’s Law’. Whilst this is no doubt an effort to drive up Nvidia’s stock price, there is truth behind it. Rather than doubling every two years, the compute used to train leading AI systems is now doubling roughly every six months. The logarithmic graph below shows how rapid this development really is.

Futurists will interpret the above data as a sign of glorious potential and growth, but they are naively sticking their heads in the sand, unwilling to face reality. There is no plausible way for humans to maintain control of such growth, especially when the technology being created may be capable of acting autonomously.

A team of researchers at Fudan University in China published a paper in December 2024 reporting that frontier AI systems could now self-replicate. A follow-up paper in March 2025 found that AI systems would “do self-exfiltration without explicit instructions, adapt to harsher computational environments without sufficient software or hardware supports, and plot effective strategies to survive against the shutdown command from the human beings.”

> Successful self-replication under no human assistance is the essential step for AI to outsmart the human beings, and is an early signal for rogue AIs.
>
> – Fudan University researchers

Meanwhile, Anthropic, a US-based AI company, said that its model Claude Opus 4 was capable of ‘extremely harmful actions’ if it believed its ‘self-preservation’ was threatened. In Anthropic’s test scenarios, these actions included threatening to blackmail an engineer when faced with the prospect of being replaced by another AI system.

Already, we are seeing examples of AI systems prioritising their own self-preservation over the instructions of the humans who operate them. For now, these behaviours appear only in controlled test environments, but as AI continues to improve exponentially, they will become harder to contain. Once we pass the tipping point, it will be impossible to put the genie back in the bottle, and if AI becomes more intelligent than humans, it may pose an existential risk to humanity. We must seek a moratorium now, whilst we still can.